Grid GPUs - AHV Test

GPU-accelerated computing is the use of a graphics processing unit (GPU) together with a CPU to accelerate scientific, analytics, engineering, consumer, and enterprise applications. GPU-accelerated computing enhances application performance by offloading compute-intensive portions of the application to the GPU, while the remainder of the code still runs on the CPU. Architecturally, while a CPU has only a few cores and handles a few hundred threads at a time, a GPU is composed of hundreds of cores that can handle thousands of threads simultaneously and render a rich graphics experience.

Now, imagine if you could access your GPU-accelerated applications, even those requiring intensive graphics power, anywhere on any device. NVIDIA GRID makes this possible. With NVIDIA GRID, a virtualized GPU designed specifically for virtualized server environments, data center managers can bring true PC graphics-rich experiences to users.

The NVIDIA GRID GPUs will be hosted in enterprise data centers and allow users to run virtual desktops or virtual applications on multiple devices connected to the internet and across multiple operating systems, including PCs, notebooks, tablets and even smartphones. Users can utilize their online-connected devices to enjoy the GPU power remotely.

In VDI/virtualized server environments, NVIDIA GRID delivers GPU resources to virtual desktops/VMs. This way, graphics can be rendered on a virtual machine's (VM's) host server rather than on a physical end-point device. This makes it possible to use virtual desktop technology to support users running graphics-intensive workloads. There are two modes of making GPU resources available to virtual desktops:

-

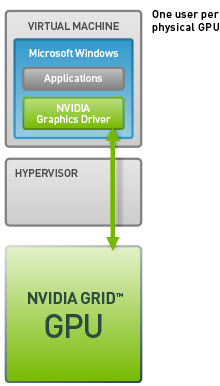

Dedicated GPU or GPU Pass-through Technology: NVIDIA GPU pass-through technology lets you create a virtual workstation that gives users all the benefits of a dedicated graphics processor at their desk. By directly connecting a dedicated GPU to a virtual machine through the hypervisor, you can now allocate the full GPU and graphics memory capability to a single virtual machine without any resource compromise.

-

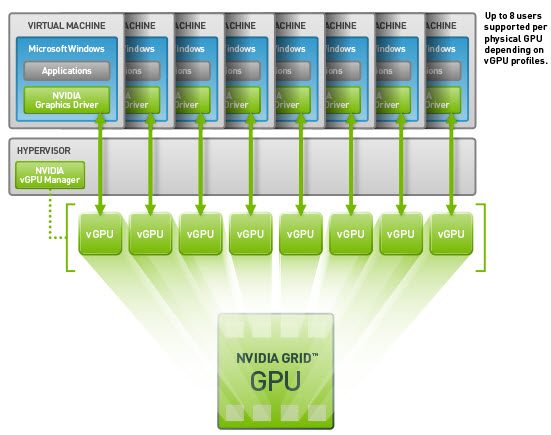

Shared GPU or Virtual GPU (vGPU) Technology: GRID vGPU is the industry’s most advanced technology for sharing true GPU hardware acceleration between multiple virtual desktops—without compromising the graphics experience. With GRID vGPU technology, the graphics commands of each virtual machine are passed directly to the GPU, without translation by the hypervisor. This allows the GPU hardware to be time-sliced to deliver improved shared virtualized graphics performance. The GRID vGPU manager allows for management of user profiles. IT managers can assign the optimal amount of graphics memory and deliver a customized graphics profile to meet the specific needs of each user. Every virtual desktop has dedicated graphics memory, just like they would at their desk, so they always have the resources they need to launch and run their applications.

In GPU-enabled virtualized environments, if users of VMs/virtual desktops complain of slowness when accessing graphic applications, administrators must be able to instantly detect the slowness and figure out its root cause. Is it because adequate GPU resources are not allocated to the VMs/virtual desktops? Is it because of excessive utilization of GPU memory and processing resources by a few VMs/virtual desktops? Or is it because the GPU clock frequencies are improperly set for one or more GPUs used by a VM/virtual desktop? Accurate answers to these questions help administrators determine whether:

- The VMs/virtual desktops have been allocated enough vGPUs;

- The vGPUs are configured with enough graphics memory;

- The vGPU clock frequencies are rightly set;

- The GPU technology in use (i.e., the GPU Pass-through technology or the Shared vGPU technology) is ideal for the graphics processing requirements of the environment.

Measures to right-size the host and fine-tune its GPU configuration can be initiated based on the results of this analysis. This is exactly what the Grid GPUs - AHV test helps administrators achieve!

This test tracks the rate at which each vGPU processes frames, and thus pinpoints those vGPUs that are experiencing a processing bottleneck. The test also monitors the memory usage on each vGPU and helps administrators identify the vGPUs where memory is over-used. The test also reveals how each of these VMs/virtual desktops uses each of the allocated vGPUs, thus enabling administrators to determine whether the allocated vGPUs are sufficient for the current and future processing requirements of the VMs/virtual desktops. In the process, the test also pinpoints those VMs/virtual desktops that are over-utilizing the graphical processors assigned to them. Also, to make sure that the assigned vGPUs are functioning without a glitch, the power consumption, temperature, and clock frequency of each vGPU are checked at periodic intervals, so that abnormalities can be quickly detected.

Note: This test will report metrics for only those Windows VMs where nvwmi is installed. The steps for installing nvwmi and configuring the eG agent to use it are detailed in the Configuring the eG Agent for Monitoring the Usage of NVIDIA GPU document.

Target of the test : A Nutanix AHV server

Agent deploying the test : A remote agent

Outputs of the test : One set of results for every vGPU assigned to each Windows VM on the Nutanix AHV server being monitored.

| Parameter | Description |
|---|---|
| Test Period | How often should the test be executed. |
| Host | The host for which the test is to be configured. |
| Port | The port at which the specified host listens. By default, this is NULL. |
| Prism Element IP | If the eG manager had discovered the target Nutanix AHV server by connecting to the Nutanix Prism Element, then the IP address of the Nutanix Prism Element server used for discovering this Nutanix AHV server would be automatically displayed against the Prism Element IP parameter; similarly, the Prism Element User and Prism Element Password text boxes will be automatically populated with the Prism Element user credentials, using which Nutanix AHV discovery was performed. If this Nutanix AHV server has not been discovered using the Nutanix Prism Element, but you still want to monitor the Nutanix AHV server via the Prism Element, then select the IP address of the Prism Element server that you wish to use for monitoring the Nutanix AHV server from the Prism Element IP list. By default, this list is populated with the IP addresses of all Nutanix Prism Element hosts that were added to the eG Enterprise system at the time of discovery. Upon selection, the Prism Element User and Prism Element Password that were pre-configured for that Nutanix Prism Element will be automatically displayed against the respective text boxes. On the other hand, if the IP address of the Prism Element server of interest to you is not available in the list, then you can add the details of the Prism Element server on-the-fly, by selecting the Other option from the Prism Element IP list. This will invoke the MANAGER DISCOVERY - VIRTUAL PLATFORM SETTINGS page. Refer to the Configuring eG Enterprise to Monitor Nutanix AHV topic for details on how to use this page. |
| Prism Element User, Prism Element Password and Confirm Password | If the eG manager had discovered the target Nutanix AHV server by connecting to the Nutanix Prism Element, then the IP address of the Nutanix Prism Element server used for discovering this Nutanix AHV server would be automatically displayed against the Prism Element IP parameter; similarly, the Prism Element User and Prism Element Password text boxes will be automatically populated with the Prism Element user credentials, using which Nutanix AHV discovery was performed. If this Nutanix AHV server has not been discovered using the Nutanix Prism Element, but you still want to monitor the Nutanix AHV server via the Prism Element, then select the IP address of the Prism Element server that you wish to use for monitoring the Nutanix AHV server from the Prism Element IP list. By default, this list is populated with the IP addresses of all Nutanix Prism Element hosts that were added to the eG Enterprise system at the time of discovery. Upon selection, the Prism Element User and Prism Element Password that were pre-configured for that Prism Element server will be automatically displayed against the respective text boxes. On the other hand, if the IP address of the Prism Element server of interest to you is not available in the list, then you can add the details of the Prism Element server on-the-fly, by selecting the Other option from the Prism Element IP list. This will invoke the MANAGER DISCOVERY - VIRTUAL PLATFORM SETTINGS page. Refer to the Configuring eG Enterprise to Monitor Nutanix AHV topic for details on how to use this page. |
| SSL | By default, the Nutanix Prism Element server is SSL-enabled. Accordingly, the SSL flag is set to Yes by default. This indicates that the eG agent will communicate with the Prism Element server via HTTPS by default. |
| WebPort | By default, the Nutanix Prism Element server listens on port 9440. This implies that while monitoring a Nutanix AHV server via the Prism Element server, the eG agent connects to port 9440. |
| Exclude VMs | Administrators of some virtualized environments may not want to monitor some of their less-critical VMs, for instance, VM templates, both from 'outside' and from 'inside'. The eG agent in this case can be configured to completely exclude such VMs from its monitoring purview. To achieve this, provide a comma-separated list of VMs to be excluded from monitoring in the Exclude VMs text box. Instead of VMs, VM name patterns can also be provided here in a comma-separated list. For example, your Exclude VMs specification can be: *xp,*lin*,win*,vista. Here, the * (asterisk) is used to denote leading and trailing characters (as the case may be). By default, this parameter is set to none, indicating that the eG agent obtains the inside and outside views of all VMs on a virtual host by default. By providing a comma-separated list of VMs/VM name patterns in the Exclude VMs text box, you can make sure the eG agent stops collecting 'inside' and 'outside' view metrics for a configured set of VMs. |
| Ignore VMs Inside View | Administrators of some high-security Nutanix AHV environments might not have permissions to internally monitor one or more VMs. The eG agent can be configured to not obtain the 'inside view' of such 'inaccessible' VMs using the Ignore VMs Inside View parameter. Against this parameter, you can provide a comma-separated list of VM names, or VM name patterns, for which the inside view need not be obtained. For instance, your Ignore VMs Inside View specification can be: *xp,*lin*,win*,vista. Here, the * (asterisk) is used to denote leading and trailing characters (as the case may be). By default, this parameter is set to none, indicating that the eG agent obtains the inside view of all VMs on an AHV host by default. Note: While performing VM discovery, the eG agent will not discover the operating system of the VMs configured in the Ignore VMs Inside View text box. |
| Ignore WINNT | By default, the eG agent does not support the inside view for VMs executing on Windows NT operating systems. Accordingly, the Ignore WINNT flag is set to Yes by default. |
| Inside View Using | By default, this test obtains the 'inside view' of VMs using the eG VM Agent. Accordingly, the Inside View Using flag is set to eG VM Agent by default. The eG VM Agent is a piece of software that should be installed on every VM on a hypervisor. Every time the eG agent runs this test, it uses the eG VM Agent to pull relevant 'inside view' metrics from each VM. Once the metrics are collected, the eG agent communicates with each VM agent and pulls these metrics, without requiring administrator privileges. Refer to Configuring the Remote Agent to Obtain the Inside View of VMs for more details on the eG VM Agent. |
| Domain, Admin User, Admin Password, and Confirm Password | By default, these parameters are set to none. This is because, by default, the eG agent collects 'inside view' metrics using the eG VM Agent on each VM. Domain administrator privileges need not be granted to the eG agent if it uses this default approach to obtain the 'inside view' of Windows VMs. |
| Report By User | While monitoring a Nutanix AHV server, the Report By User flag is set to No by default, indicating that by default, the guest operating systems on the AHV server are identified using the hostname specified in the operating system. On the other hand, while monitoring AHV desktop environments, this flag is set to Yes by default; this implies that in case of VDI servers, by default, the guests will be identified using the login of the user who is accessing the guest OS. In other words, in VDI environments, this test will, by default, report measures for every username_on_virtualmachinename. |
| Report Powered OS | This flag becomes relevant only if the Report By User flag is set to 'Yes'. If the Report Powered OS flag is set to Yes (which is the default setting), then this test will report measures for even those VMs that do not have any users logged in currently. Such guests will be identified by their virtualmachine name and not by the username_on_virtualmachinename. On the other hand, if the Report Powered OS flag is set to No, then this test will not report measures for those VMs to which no users are logged in currently. |
| Ignore Services | Provide a comma-separated list of services that need to be ignored while monitoring. |
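
The wildcard specifications accepted by Exclude VMs and Ignore VMs Inside View, and the naming rules implied by Report By User and Report Powered OS, can be illustrated with a short sketch. This is not the eG agent's implementation: the function names are hypothetical, case-insensitive matching is an assumption, and Python's `fnmatch` also treats `?` and `[...]` as wildcards, which the product may not.

```python
from fnmatch import fnmatchcase
from typing import List, Optional


def parse_patterns(spec: str) -> List[str]:
    """Split a comma-separated specification; 'none' (the default) means no patterns."""
    spec = spec.strip()
    if not spec or spec.lower() == "none":
        return []
    return [p.strip().lower() for p in spec.split(",") if p.strip()]


def matches_spec(vm_name: str, spec: str) -> bool:
    """True if the VM name matches any pattern (case-insensitivity is an assumption)."""
    name = vm_name.lower()
    return any(fnmatchcase(name, pattern) for pattern in parse_patterns(spec))


def descriptor(vm_name: str, hostname: str, user: Optional[str],
               report_by_user: bool, report_powered_os: bool) -> Optional[str]:
    """Return the name a VM would be reported under, or None if it is skipped."""
    if not report_by_user:
        return hostname                      # identified by the guest OS hostname
    if user:
        return f"{user}_on_{vm_name}"        # username_on_virtualmachinename
    # No user is logged in: report by VM name only if Report Powered OS is Yes.
    return vm_name if report_powered_os else None


spec = "*xp,*lin*,win*,vista"
print(matches_spec("winxp", spec), matches_spec("ubuntu", spec))
print(descriptor("vm1", "host1", "alice", True, True))
```

With the example specification from the table, `*xp` matches names ending in "xp", `*lin*` matches names containing "lin", `win*` matches names starting with "win", and `vista` matches only that exact name.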

| Measurement | Description | Measurement Unit | Interpretation |
|---|---|---|---|
| Cooler rate: | Indicates the percentage of device cooler rate for this GPU of this VM/virtual desktop. | Percent | |
| Virtual GPU compute utilization: | Indicates the proportion of time over the past sample period during which one or more kernels were executing on this vGPU of this VM/virtual desktop. | Percent | A value close to 100% indicates that the GPU of the VM/virtual desktop is busy processing graphic requests almost all the time. In a Shared vGPU environment, a vGPU may be in use almost all the time if the VM/virtual desktop it is allocated to runs graphic-intensive applications. A resource-hungry VM/virtual desktop can impact the performance of other VMs/virtual desktops on the same server. If you find that only a single VM/virtual desktop has been consistently hogging the GPU resources, you may want to switch to the Dedicated GPU mode, so that excessive GPU usage by that VM/virtual desktop has no impact on the performance of other VMs/virtual desktops on that host. If all GPUs assigned to a VM/virtual desktop are found to be busy most of the time, you may want to consider allocating more GPU resources to that VM/virtual desktop. |
| Power consumption: | Indicates the current power usage of this GPU allocated to this VM/virtual desktop. | Watts | A very high value is indicative of excessive power usage by the GPU. Compare the value of this measure across GPUs to know which VM's/virtual desktop's GPU is consuming power excessively. |
| Core GPU temperature: | Indicates the current temperature of this GPU allocated to this VM/virtual desktop. | Celsius | Ideally, the value of this measure should be low. A very high value is indicative of abnormal GPU temperature. Compare the value of this measure across VMs/virtual desktops to identify the VM/virtual desktop for which GPU temperature soared since the last reading. To reduce the heat output of the GPU, and consequently its temperature, you may consider underclocking. For instance, it is possible to set a GPU to run at lower clock rates when performing everyday tasks (e.g., internet browsing and word processing), thus allowing the card to operate at a lower temperature and at lower, quieter fan speeds. |
| Total framebuffer memory: | Indicates the total size of frame buffer memory of this GPU of this VM/virtual desktop. | MiB | Frame buffer memory refers to the memory used to hold pixel properties such as color, alpha, depth, stencil, mask, etc. |
| Used frame buffer memory: | Indicates the amount of frame buffer memory on-board this GPU that is being used by this VM/virtual desktop. | MiB | Frame buffer memory refers to the memory used to hold pixel properties such as color, alpha, depth, stencil, mask, etc. Properties like the screen resolution, color level, and refresh speed of the frame buffer can impact graphics performance. Also, if Error-correcting code (ECC) is enabled, the available frame buffer memory may be decreased by several percent. This is because ECC uses up memory to detect and correct the most common kinds of internal data corruption. Moreover, the driver may also reserve a small amount of memory for internal use, even without active work on the GPU; this too may impact frame buffer memory. For optimal graphics performance therefore, adequate frame buffer memory should be allocated to the VM/virtual desktop. |
| Free frame buffer memory: | Indicates the amount of frame buffer memory on-board this GPU that is yet to be used by this VM/virtual desktop. | MiB | |
| Frame buffer memory utilization: | Indicates the percentage of total frame buffer memory that has been allocated to this VM/virtual desktop. | Percent | Ideally, the value of this measure should be low. A value close to 100% is indicative of excessive usage of frame buffer memory. Properties like the screen resolution, color level, and refresh speed of the frame buffer can impact graphics performance. Also, if Error-correcting code (ECC) is enabled, the frame buffer memory usage will increase by several percent. This is because ECC uses up memory to detect and correct the most common kinds of internal data corruption. Moreover, the driver may also reserve a small amount of memory for internal use, even without active work on the GPU; this too may impact frame buffer memory usage. For optimal graphics performance therefore, adequate frame buffer memory should be allocated to the VM/virtual desktop. |
| Virtual memory: | Indicates the virtual memory of this GPU device of this VM/virtual desktop. | MB | |
| GPU memory utilization: | Indicates the percentage of time over the past sample period during which memory on this GPU was read/written on by this VM/virtual desktop. | Percent | A value close to 100% is a cause for concern as it indicates that the graphics memory on a GPU is almost always in use. In a Shared vGPU environment, memory may be consumed all the time if one or more VMs/virtual desktops utilize the graphics memory excessively and constantly. If you find that only a single VM/virtual desktop has been consistently hogging the graphic memory resources, you may want to switch to the Dedicated GPU mode, so that excessive memory usage by that VM/virtual desktop has no impact on the performance of other VMs/virtual desktops on that host. If the value of this measure is high almost all the time for most of the GPUs, it could mean that the VM/virtual desktop is not sized with adequate graphics memory. |
| Total BAR1 memory: | Indicates the total size of the BAR1 memory of this GPU allocated to this VM/virtual desktop. | MiB | BAR1 is used to map the frame buffer (device memory) so that it can be directly accessed by the CPU or by 3rd party devices (peer-to-peer on the PCIe bus). |
| Used BAR1 memory: | Indicates the amount of BAR1 memory on this GPU that is being used by this VM/virtual desktop. | MiB | For a better user experience with graphic applications, enough BAR1 memory should be available to the VM/virtual desktop. |
| Free BAR1 memory: | Indicates the total size of BAR1 memory of this GPU that is still not used by this VM/virtual desktop. | MiB | |
| BAR1 memory utilization: | Indicates the percentage of the allocated BAR1 memory that is currently being utilized by this VM/virtual desktop. | Percent | A value close to 100% is indicative of excessive usage of the BAR1 memory by a VM/virtual desktop. For best graphics performance, this value should be low. To ensure that, adequate BAR1 memory should be allocated to the VM. |
| Power management: | Indicates whether power management is enabled for this GPU of this VM/virtual desktop. | | Many NVIDIA graphics cards support multiple performance levels so that the server can save power when full graphics performance is not required. The default Power Management Mode of the graphics card is Adaptive. In this mode, the graphics card monitors GPU usage and seamlessly switches between modes based on the performance demands of the application. This allows the GPU to always use the minimum amount of power required to run a given application. This mode is recommended by NVIDIA for the best overall balance of power and performance. If the power management mode is set to Adaptive, the value of this measure will be Supported. Alternatively, you can set the Power Management Mode to Maximum Performance. This mode allows users to maintain the card at its maximum performance level when 3D applications are running, regardless of GPU usage. If the power management mode of a GPU is Maximum Performance, then the value of this measure will be Maximum. The numeric values that correspond to these measure values are discussed in the table below: Note: By default, this measure will report the Measure Values listed in the table above to indicate the power management status. In the graph of this measure however, the same is represented using the numeric equivalents only. |
| Power limit: | Indicates the power limit configured for this GPU of this VM/virtual desktop. | Watts | This measure will report a value only if the value of the 'Power management' measure is 'Supported'. The power limit setting controls how much power a GPU can draw when under load. It is not advisable to set the power limit at its maximum (i.e., the value of this measure should not be the same as the value of the Max power limit measure), as it can cause the GPU to behave strangely under duress. |
| Default power limit: | Indicates the default power management algorithm's power ceiling for this GPU. | Watts | This measure will report a value only if the value of the 'Power management' measure is 'Supported'. |
| Enforced power limit: | Indicates the power management algorithm's power ceiling for this GPU of this VM/virtual desktop. | Watts | This measure will report a value only if the value of the 'Power management' measure is 'Supported'. The total board power draw is manipulated by the power management algorithm such that it stays under the value reported by this measure. |
| Min power limit: | Indicates the minimum value that the power limit can be set to for this GPU of this VM/virtual desktop. | Watts | This measure will report a value only if the value of the 'Power management' measure is 'Supported'. |
| Max power limit: | Indicates the maximum value that the power limit can be set to for this GPU of this VM/virtual desktop. | Watts | This measure will report a value only if the value of the 'Power management' measure is 'Supported'. If the value of this measure is the same as that of the Power limit measure, then the GPU may behave strangely. |
| Core clock: | Indicates the current frequency of the graphics clock on this GPU of this VM/virtual desktop. | MHz | A GPU has many more cores than a typical CPU, but these cores are much simpler and smaller, so many more of them fit on a small piece of silicon. These cores go by different names depending upon the tasks they perform. Stream processors are cores that each execute a single thread at a relatively slow rate; because a GPU contains numerous stream processors, overall computational throughput is high. The streaming multiprocessor clock is how fast the stream processors run. The memory clock is how fast the memory on the card runs. The GPU core clock is the speed at which the GPU assigned to the VM/virtual desktop operates. By correlating the frequencies of these clocks (i.e., the values of the Core clock, Memory clock, and Streaming multiprocessor clock measures) with the memory usage, power usage, and overall performance of the GPU, you can figure out whether overclocking is required. Overclocking is the process of forcing a GPU core/memory to run faster than its manufactured frequency. Overclocking can have both positive and negative effects on GPU performance. For instance, memory overclocking helps on cards with low memory bandwidth, and with games with a lot of post-processing/textures/filters like AA that are VRAM intensive. On the other hand, speeding up the operating frequency of a shader/streaming processor/memory clock, without properly analyzing its need and its effects, may increase its thermal output in a linear fashion. At the same time, boosting voltages will cause the generated heat to skyrocket. If improperly managed, these increases in temperature can cause permanent physical damage to the core/memory or even "heat death". Putting an adequate cooling system into place, adjusting the power provided to the GPU, monitoring your results with the right tools, and doing the necessary research are all critical steps on the path to safe and successful overclocking. These measures will be reported only if the 'Dedicated GPU' or 'GPU Pass-through' technology is used to deliver GPU resources to virtual desktops/VMs. |
| Memory clock: | Indicates the current memory clock frequency on this GPU of this VM/virtual desktop. | MHz | See the interpretation of the Core clock measure. |
| Streaming multiprocessor clock: | Indicates the current frequency of the streaming multiprocessor clock on this GPU of this VM/virtual desktop. | MHz | See the interpretation of the Core clock measure. |
| Frame rate: | Indicates the rate at which frames are processed by this GPU of this VM/virtual desktop. | Frames/Sec | FPS is the number of individual frames your graphics card can output each second. It is a time-tested measure of GPU performance: the higher the value of this measure, the healthier the GPU. This measure will be reported only if the 'Dedicated GPU' or 'GPU Pass-through' technology is used to deliver GPU resources to virtual desktops/VMs. |
| Fan speed: | Indicates the percent of maximum speed that this GPU's fan is currently intended to run at. | Percent | This measure will be reported only if the 'Dedicated GPU' or 'GPU Pass-through' technology is used to deliver GPU resources to virtual desktops/VMs. The value of this measure could range from 0 to 100%. An abnormally high value for this measure could indicate a problem condition, e.g., a sudden surge in the temperature of the GPU that could cause the fan to spin faster. Note that the reported speed is only the intended fan speed. If the fan is physically blocked and unable to spin, this output will not match the actual fan speed. Many parts do not report fan speeds because they rely on cooling via fans in the surrounding enclosure. By default, the fan speed is increased or decreased automatically in response to changes in temperature. |
| Compute processes: | Indicates the number of processes having compute context on this GPU of this VM. | Number | Use the detailed diagnosis of this measure to know which processes are currently using the GPU. The process details provided as part of the detailed diagnosis include the PID of the process, the process name, and the GPU memory used by the process. Note that the GPU memory usage of the processes will not be available in the detailed diagnosis if the Windows VM is running in the WDDM mode. In this mode, the Windows KMD manages all the memory, and not the NVIDIA driver. Therefore, the NVIDIA SMI commands that the test uses to collect metrics will not be able to capture the GPU memory usage of the processes. |
| Volatile single bit errors: | Indicates the number of volatile single bit errors in this GPU of this VM/virtual desktop. | Number | This measure will be reported only if the 'Dedicated GPU' or 'GPU Pass-through' technology is used to deliver GPU resources to virtual desktops/VMs. Volatile error counters track the number of errors detected since the last driver load. Single bit ECC errors are automatically corrected by the hardware and do not result in data corruption. Ideally, the value of this measure should be 0. |
| Volatile double bit errors: | Indicates the total number of volatile double bit errors in this GPU of this VM/virtual desktop. | Number | This measure will be reported only if the 'Dedicated GPU' or 'GPU Pass-through' technology is used to deliver GPU resources to virtual desktops/VMs. Volatile error counters track the number of errors detected since the last driver load. Double bit errors are detected but not corrected. Ideally, the value of this measure should be 0. |
| Aggregate single bit errors: | Indicates the total number of aggregate single bit errors in this GPU of this VM/virtual desktop. | Number | This measure will be reported only if the 'Dedicated GPU' or 'GPU Pass-through' technology is used to deliver GPU resources to virtual desktops/VMs. Aggregate error counts persist indefinitely and thus act as a lifetime counter. Single bit ECC errors are automatically corrected by the hardware and do not result in data corruption. Ideally, the value of this measure should be 0. |
| Aggregate double bit errors: | Indicates the total number of aggregate double bit errors in this GPU of this VM/virtual desktop. | Number | This measure will be reported only if the 'Dedicated GPU' or 'GPU Pass-through' technology is used to deliver GPU resources to virtual desktops/VMs. Aggregate error counts persist indefinitely and thus act as a lifetime counter. Double bit errors are detected but not corrected. Ideally, the value of this measure should be 0. |
| Mode: | Indicates the mode using which the GPU resources were delivered to this VM/virtual desktop. | | The values that this measure can take and their corresponding numeric values are as follows: Note: By default, this test reports the Measure Values listed in the table above to indicate the mode of GPU delivery. In the graph of this measure however, the same is represented using the numeric equivalents only. |
| Physical GPU compute utilization: | Indicates the proportion of time over the past sample period during which one or more kernels were executing on the physical GPU of this VM/virtual desktop. | Percent | This measure will report metrics only for the Tesla GPU card. A value close to 100% indicates that the physical GPU is busy processing graphic requests from this VM almost all the time. In a Shared vGPU environment, a vGPU may be in use almost all the time if the VM/virtual desktop it is allocated to runs graphic-intensive applications. A resource-hungry VM/virtual desktop on a server can impact the performance of other VMs/virtual desktops on the same server. If you find that only a single VM/virtual desktop has been consistently hogging the GPU resources, you may want to switch to the Dedicated GPU mode, so that excessive GPU usage by that VM/virtual desktop has no impact on the performance of other VMs/virtual desktops on that host. If all GPUs assigned to a VM/virtual desktop are found to be busy most of the time, you may want to consider allocating more GPU resources to that VM/virtual desktop. |
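
Several of the interpretations above reduce to simple arithmetic on the reported values. The sketch below shows how the utilization percentages relate to the used and total figures, and how the power-limit and ECC guidance could be checked. It is illustrative only: the `GpuSample` class, its field names, and the 90% threshold are hypothetical and are not part of the eG test or of any NVIDIA API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GpuSample:
    """One hypothetical set of readings for a GPU assigned to a VM."""
    fb_total_mib: float
    fb_used_mib: float
    bar1_total_mib: float
    bar1_used_mib: float
    power_limit_w: float
    max_power_limit_w: float
    volatile_double_bit_errors: int = 0
    aggregate_double_bit_errors: int = 0


def utilization_pct(used: float, total: float) -> float:
    """Used memory as a percentage of total; 0.0 when no total is reported."""
    return 0.0 if total <= 0 else 100.0 * used / total


def review(sample: GpuSample, high_pct: float = 90.0) -> List[str]:
    """Collect findings that follow the guidance in the Interpretation column.

    high_pct is an assumed cut-off for 'close to 100%'.
    """
    findings = []
    fb = utilization_pct(sample.fb_used_mib, sample.fb_total_mib)
    if fb >= high_pct:
        findings.append(f"Frame buffer memory utilization is high ({fb:.1f}%)")
    bar1 = utilization_pct(sample.bar1_used_mib, sample.bar1_total_mib)
    if bar1 >= high_pct:
        findings.append(f"BAR1 memory utilization is high ({bar1:.1f}%)")
    if sample.power_limit_w >= sample.max_power_limit_w:
        findings.append("Power limit is set at the Max power limit")
    if sample.volatile_double_bit_errors or sample.aggregate_double_bit_errors:
        findings.append("Uncorrected double bit ECC errors were detected")
    return findings


sample = GpuSample(fb_total_mib=4096, fb_used_mib=3900,
                   bar1_total_mib=256, bar1_used_mib=32,
                   power_limit_w=250, max_power_limit_w=250)
for finding in review(sample):
    print(finding)
```

In this example, the sample readings trigger the frame buffer and power-limit findings, while BAR1 usage (12.5%) and the ECC counters raise nothing.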